What happens when we have the same cost function for linear and logistic regression?

Please refer to the link below:

https://www.geeksforgeeks.org/ml-cost-function-in-logistic-regression/

The cost function summarizes the errors that the current model is making in its predictions over the data set.

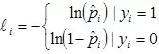
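To make the question concrete, here is a minimal sketch of what goes wrong when the squared-error cost from linear regression is applied to sigmoid outputs. The toy data, the one-parameter model `sigmoid(w * x)`, and all function names are assumptions made up for illustration. The sketch checks the curvature of both costs on a grid of `w` values: the squared-error cost has regions of negative curvature (so it is not convex in `w`), while the log loss cost does not.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy data: one feature, binary labels.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

def mse_cost(w):
    """Squared-error cost (the linear regression cost) applied to sigmoid outputs."""
    return sum((y - sigmoid(w * x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

def log_loss_cost(w):
    """Average negative log-likelihood (the usual logistic regression cost)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(xs)

def second_differences(f, grid):
    """Discrete curvature f(w-h) - 2*f(w) + f(w+h); a negative value means f is not convex there."""
    vals = [f(w) for w in grid]
    return [vals[i - 1] - 2 * vals[i] + vals[i + 1] for i in range(1, len(vals) - 1)]

grid = [-6.0 + 0.05 * i for i in range(241)]  # w from -6 to 6
mse_curv = second_differences(mse_cost, grid)
ll_curv = second_differences(log_loss_cost, grid)

print("min curvature, squared error:", min(mse_curv))  # negative on this data
print("min curvature, log loss:     ", min(ll_curv))   # stays positive
```

A non-convex cost can have flat regions and more than one stationary point, so gradient descent is not guaranteed to reach the global minimum. That is the usual argument for using log loss with logistic regression.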

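There is a loss function, which expresses how much the estimate has missed the mark for an individual observation. You could call this the “residual”. With logistic regression, the loss for an observation with label $y_i \in \{0, 1\}$ and predicted probability $\hat{p}_i$ is the negative log-likelihood:

$$\ell_i = -\left[\, y_i \log \hat{p}_i + (1 - y_i) \log\left(1 - \hat{p}_i\right) \right]$$

The cost function then aggregates these losses for the data set. I think the cost might be expressed as the average deviance per observation.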
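As a rough sketch of the loss versus cost distinction, the snippet below computes the log loss for each observation and then the cost as the mean over the data set. The labels and predicted probabilities are invented for illustration. It also assumes ungrouped binary data, where the deviance is twice the summed log loss, so the average deviance per observation is simply twice the mean log loss.

```python
import math

def log_loss(y, p):
    """Loss for one observation: negative log-likelihood of label y given probability p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

# Hypothetical labels and predicted probabilities.
ys = [1, 0, 1, 1]
ps = [0.9, 0.2, 0.6, 0.3]

losses = [log_loss(y, p) for y, p in zip(ys, ps)]  # one loss per observation
cost = sum(losses) / len(losses)                   # cost: average loss over the data set

# Assumption: ungrouped binary data, so deviance = 2 * total log loss.
deviance = 2 * sum(losses)
avg_deviance = deviance / len(losses)

print("per-observation losses:", losses)
print("cost (mean log loss):", cost)
print("average deviance per observation:", avg_deviance)
```

The last observation (label 1, predicted probability 0.3) contributes the largest loss, which is the sense in which the loss measures how far one estimate missed the mark.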