Q-learning is another reinforcement learning method. It assigns a Q value to each state-action pair, which estimates the reward expected from taking that action in that state and following the resulting path.

The generic Q-learning algorithm learns from rewards placed throughout a given environment. Each episode consists of taking actions from state to state until a goal state is reached.

During learning, actions are chosen probabilistically (as a function of the Q values), which allows the state-action space to be explored. When the goal state is reached, the process starts over from the initial state.
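The text does not say which function of the Q values drives the choice. A common option, assumed in this sketch, is softmax (Boltzmann) selection: each action is picked with probability proportional to exp(Q / temperature). The `temperature` parameter and the function names are illustrative; lower temperatures make the choice greedier, while higher ones push it toward uniform.

```python
import math
import random


def softmax_probabilities(q_values, temperature=1.0):
    """Convert one state's Q values into action-selection probabilities."""
    # Subtract the largest Q value before exponentiating to avoid overflow.
    max_q = max(q_values)
    exps = [math.exp((q - max_q) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def choose_action(q_values, temperature=1.0, rng=random):
    """Sample an action index with probability proportional to exp(Q / temperature)."""
    probs = softmax_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]


# Three actions in one state: action 1 is the most likely pick,
# but actions 0 and 2 are still chosen occasionally, which drives exploration.
print(softmax_probabilities([1.0, 2.0, 0.5]))
print(choose_action([1.0, 2.0, 0.5], temperature=0.5))
```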

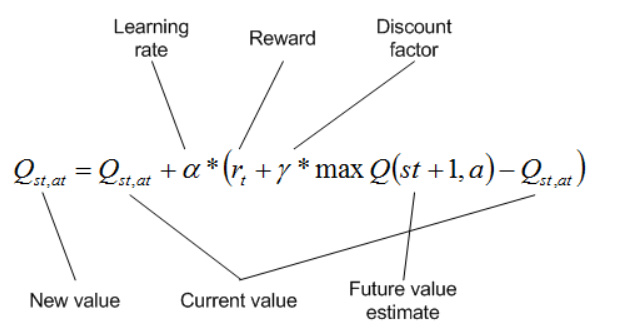

Each time an action is chosen in a particular state, the Q value for that state-action pair is updated. The update combines the reward received for the action with the maximum Q value available in the new state, that is, the state reached by applying the action to the current state.

This update is tempered by a learning rate, which defines how much weight new information receives compared with the existing estimate. A discount factor, which scales the maximum Q value of the new state, expresses the importance of long-term rewards relative to short-term rewards.
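Putting the two paragraphs together gives the standard Q-learning update, Q(s, a) ← Q(s, a) + α · (r + γ · max Q(s′, ·) − Q(s, a)), where α is the learning rate and γ the discount factor. The minimal sketch below assumes a Q table stored as a dict mapping each state to a list of per-action Q values; the function name and default parameter values are hypothetical.

```python
def q_learning_update(Q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one Q-learning update to Q (a dict: state -> list of per-action Q values)."""
    # The maximum Q value available in the new state.
    best_next = max(Q[next_state])
    # Target: immediate reward plus the discounted long-term estimate.
    target = reward + gamma * best_next
    # Move the old estimate toward the target by a fraction alpha (the learning rate).
    Q[state][action] += alpha * (target - Q[state][action])
    return Q[state][action]


# Two states, two actions each; state 1 plays the role of the goal here.
Q = {0: [0.0, 0.0], 1: [0.0, 0.0]}
print(q_learning_update(Q, 0, 1, reward=1.0, next_state=1, alpha=0.5, gamma=0.9))  # → 0.5
```

A terminal (goal) state's Q values are normally left at zero, so the target for the final step of an episode reduces to the reward alone.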

Note that the environment may contain many negative and positive rewards, or only the goal state may yield a reward.
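The two reward styles can be contrasted with a pair of hypothetical reward functions for a small grid or corridor. The specific values (1 for the goal, -1 for a trap, a small per-step cost) are illustrative assumptions, not part of the algorithm.

```python
def sparse_reward(next_state, goal):
    """Only reaching the goal is rewarded; every other transition gives 0."""
    return 1.0 if next_state == goal else 0.0


def dense_reward(next_state, goal, traps, step_cost=0.01):
    """Positive reward at the goal, a penalty for traps, and a small cost for every other step."""
    if next_state == goal:
        return 1.0
    if next_state in traps:
        return -1.0
    return -step_cost


# Hypothetical corridor with the goal at cell 5 and a trap at cell 2.
print(sparse_reward(3, goal=5), dense_reward(3, goal=5, traps={2}))
```

With the sparse version, useful Q values only appear once the goal has been reached at least once and then spread backward over many episodes; the dense version gives feedback on almost every step.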

Each episode runs until the goal state is reached, and episodes are repeated many times so that the Q values are gradually refined through the probabilistic selection of actions in each state.
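The episodic loop described so far can be sketched end to end on a toy environment. Everything environment-specific here is a hypothetical assumption: a six-cell corridor with the start at cell 0, the goal at cell 5, two actions (left and right), and a reward of 1 only on reaching the goal. Action selection uses softmax as one possible probabilistic rule, and `alpha`, `gamma`, `temperature`, and the episode count are illustrative values.

```python
import math
import random

N_STATES = 6          # corridor cells 0..5 (hypothetical toy environment)
START, GOAL = 0, 5
ACTIONS = (0, 1)      # 0 = move left, 1 = move right


def step(state, action):
    """Move one cell (walls at both ends); the reward is 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def softmax_choice(q_values, temperature, rng):
    """Pick an action with probability proportional to exp(Q / temperature)."""
    max_q = max(q_values)
    weights = [math.exp((q - max_q) / temperature) for q in q_values]
    return rng.choices(ACTIONS, weights=weights, k=1)[0]


def train(episodes=300, alpha=0.5, gamma=0.9, temperature=1.0, seed=0):
    rng = random.Random(seed)
    # One row of Q values per state; the goal row stays at zero because it is terminal.
    Q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = START                      # each episode restarts from the beginning
        while state != GOAL:               # ...and runs until the goal is reached
            action = softmax_choice(Q[state], temperature, rng)
            next_state, reward = step(state, action)
            target = reward + gamma * max(Q[next_state])
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
    return Q


Q = train()
for s, row in enumerate(Q):
    print(s, [round(q, 3) for q in row])
```

After training, the right-moving action should carry the higher Q value in every non-goal cell, with those values shrinking by roughly a factor of `gamma` for each extra cell between the agent and the goal.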

Once learning is complete, the Q values can be used greedily (always taking the action with the highest Q value in each state) to maximize the reward obtained and reach the goal state as quickly as possible.
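Greedy use of a learned table amounts to taking the arg-max action in each state. This sketch assumes a Q table stored as a list with one row of per-action values per state, plus a caller-supplied `step` function; the Q values below are made up for a four-cell corridor rather than produced by training, and all names are hypothetical.

```python
def greedy_action(q_values):
    """Index of the largest Q value; max() returns the first index on ties."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def greedy_path(Q, start, goal, step, max_steps=100):
    """Follow the greedy policy from start until the goal (or a step limit) is reached."""
    path, state = [start], start
    while state != goal and len(path) <= max_steps:
        state = step(state, greedy_action(Q[state]))
        path.append(state)
    return path


def move(state, action):
    """Hypothetical 4-cell corridor: action 0 moves left, action 1 moves right."""
    return max(0, state - 1) if action == 0 else min(3, state + 1)


# Made-up learned values (goal = cell 3, whose row stays at zero).
Q = [[0.729, 0.81], [0.729, 0.9], [0.81, 1.0], [0.0, 0.0]]
print(greedy_path(Q, start=0, goal=3, step=move))  # → [0, 1, 2, 3]
```

The `max_steps` guard is a precaution: a poorly trained table can contain loops that never reach the goal.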

Reinforcement learning includes other algorithms with distinct properties. State-action-reward-state-action (SARSA) is similar to Q-learning, except that its update uses the Q value of the next action actually chosen by the (probabilistic) policy rather than the maximum Q value available in the next state.
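The difference between the two methods shows up in the update target. The sketch below, with hypothetical function names and a dict-based Q table, computes both targets for the same transition; either value would then be plugged into the same learning-rate update.

```python
def q_learning_target(Q, reward, next_state, gamma):
    """Q-learning: bootstrap from the best action available in the next state."""
    return reward + gamma * max(Q[next_state])


def sarsa_target(Q, reward, next_state, next_action, gamma):
    """SARSA: bootstrap from the action the policy actually chose in the next state."""
    return reward + gamma * Q[next_state][next_action]


# The next state has Q values [0.2, 1.0]; suppose the exploratory policy picked action 0.
Q = {"s2": [0.2, 1.0]}
print(q_learning_target(Q, reward=0.0, next_state="s2", gamma=0.5))             # → 0.5
print(sarsa_target(Q, reward=0.0, next_state="s2", next_action=0, gamma=0.5))  # → 0.1
```

Because SARSA's target follows the exploratory choice, its Q values reflect the policy being followed during learning, whereas Q-learning's values reflect the greedy policy regardless of how actions were actually selected.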