Ensemble learning combines multiple models into a single predictor that usually performs better than any of its individual members. Every individual model contributes to the final prediction, so the quality and diversity of those models determine how much the ensemble improves. Two common techniques are Bagging and Boosting.

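Bagging – Short for bootstrap aggregating. Several models are trained independently, and therefore potentially in parallel, each on a random sample of the data set drawn with replacement. Their predictions are then combined, typically by majority vote for classification or by averaging for regression, which reduces variance and generally improves accuracy.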
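
To make the idea concrete, here is a minimal sketch of bagging in plain Python. It assumes the usual form of the technique (bootstrap samples drawn with replacement, then a majority vote). The toy data set, the one-threshold "stump" model, and the names `fit_stump`, `bagging_fit` and `bagging_predict` are made up for illustration and are not taken from any library.

```python
import random
from collections import Counter

def fit_stump(points):
    """Pick the threshold with the fewest errors for the rule: predict 1 when x > threshold."""
    best_threshold, best_errors = None, None
    for threshold, _ in points:
        errors = sum((x > threshold) != bool(y) for x, y in points)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def bagging_fit(points, n_models, rng):
    """Train each model independently on its own bootstrap sample."""
    models = []
    for _ in range(n_models):
        # Bootstrap: draw len(points) items with replacement.
        sample = [rng.choice(points) for _ in points]
        models.append(fit_stump(sample))
    return models

def bagging_predict(models, x):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(int(x > threshold) for threshold in models)
    return votes.most_common(1)[0][0]

rng = random.Random(0)
# Hypothetical toy data: the label is 1 when x > 5.
data = [(x, int(x > 5)) for x in range(11)]
models = bagging_fit(data, n_models=25, rng=rng)
print(bagging_predict(models, 0), bagging_predict(models, 10))
```

The models never see each other's output, which is why the training loop could be run in parallel. An odd number of models is used so that a binary vote cannot tie.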

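Boosting – A sequential technique in which each new model is trained to correct the errors of the models before it, for example by giving more weight to misclassified samples or by fitting the remaining residuals. The training error is reduced at every step, so the combined model performs better than any single weak model.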
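
The sequential nature of boosting can be sketched in plain Python as follows. This is one particular flavour, chosen for brevity: a residual-fitting (gradient-boosting-style) loop for regression, in which each new stump is fitted to the errors left by the models before it. The toy data, the learning rate, and the names `fit_stump`, `stump_predict` and `boost` are assumptions for illustration only.

```python
def fit_stump(xs, targets):
    """Least-squares regression stump: one threshold, one constant on each side."""
    best = None
    # Skip the largest x so the right side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        loss = (sum((t - left_mean) ** 2 for t in left)
                + sum((t - right_mean) ** 2 for t in right))
        if best is None or loss < best[0]:
            best = (loss, threshold, left_mean, right_mean)
    return best[1:]

def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def boost(xs, ys, n_rounds, learning_rate):
    """Fit stumps one after another, each on the residuals of the current ensemble."""
    predictions = [0.0] * len(xs)
    stumps, errors = [], []
    for _ in range(n_rounds):
        # The errors of the ensemble so far become the targets of the next model.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_predict(stump, x)
                       for p, x in zip(predictions, xs)]
        errors.append(sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(xs))
    return stumps, errors

# Hypothetical toy data: y = x squared.
xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [x * x for x in xs]
stumps, errors = boost(xs, ys, n_rounds=10, learning_rate=0.5)
for round_number, error in enumerate(errors, start=1):
    print(round_number, round(error, 2))
```

The printed mean squared error on the training data does not increase from one round to the next, which is the "reduce error at every step" behaviour described above. Unlike bagging, the rounds cannot be run in parallel because each one depends on the previous predictions.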

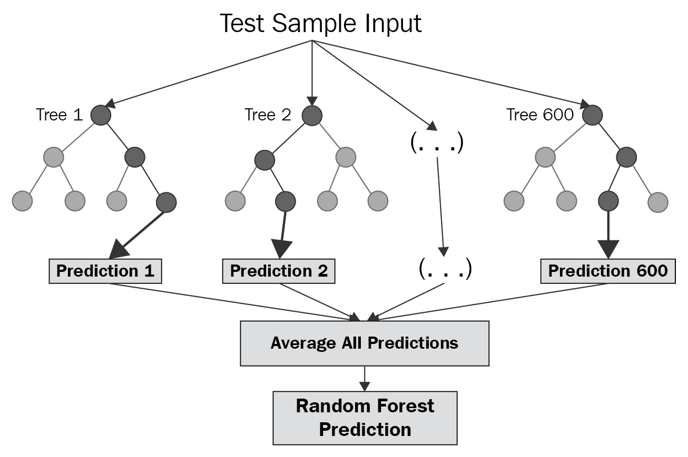

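A well-known example of ensemble learning is the Random Forest classifier. It is a bagging method that combines many decision trees, each trained on a bootstrap sample and restricted to a random subset of features at each split, into a single model that usually performs better than an individual tree.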
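
As a rough illustration of how a forest is assembled, the sketch below builds a heavily simplified one in plain Python. It assumes the common recipe of bootstrap samples plus a random subset of features, and it shrinks every "tree" to a single split (a stump) to stay short, so picking the features once per tree is the same as picking them per split. The data set and the names `fit_tree`, `fit_forest` and `forest_predict` are hypothetical; a real project would more likely use a library implementation.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_tree(rows, labels, feature_ids):
    """One-split tree: the best (feature, threshold) among the allowed features."""
    best = None
    for f in feature_ids:
        # Skip the largest value so the right side is never empty.
        for threshold in sorted({row[f] for row in rows})[:-1]:
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            left_label, right_label = majority(left), majority(right)
            errors = (sum(y != left_label for y in left)
                      + sum(y != right_label for y in right))
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    if best is None:
        # Every allowed feature was constant in this sample: always predict the majority.
        label = majority(labels)
        return (feature_ids[0], float("inf"), label, label)
    return best[1:]

def tree_predict(tree, row):
    feature, threshold, left_label, right_label = tree
    return left_label if row[feature] <= threshold else right_label

def fit_forest(rows, labels, n_trees, max_features, rng):
    n_features = len(rows[0])
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample of the rows (drawn with replacement).
        indices = [rng.randrange(len(rows)) for _ in rows]
        # Random subset of the features this tree may split on.
        feature_ids = rng.sample(range(n_features), max_features)
        forest.append(fit_tree([rows[i] for i in indices],
                               [labels[i] for i in indices],
                               feature_ids))
    return forest

def forest_predict(forest, row):
    """Majority vote over all trees."""
    return majority([tree_predict(tree, row) for tree in forest])

# Hypothetical toy data: features 0 and 1 separate the classes, feature 2 is noise.
rows = [(1, 2, 0), (2, 1, 1), (0, 3, 0), (3, 0, 1), (2, 2, 0), (1, 1, 1),
        (8, 9, 0), (9, 8, 1), (7, 10, 0), (10, 7, 1), (9, 9, 0), (8, 8, 1)]
labels = [0] * 6 + [1] * 6
forest = fit_forest(rows, labels, n_trees=15, max_features=2, rng=random.Random(0))
print(forest_predict(forest, (0, 0, 0)), forest_predict(forest, (10, 10, 1)))
```

Because each tree sees a different sample and a different set of features, the trees make different mistakes, and the vote tends to cancel them out.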