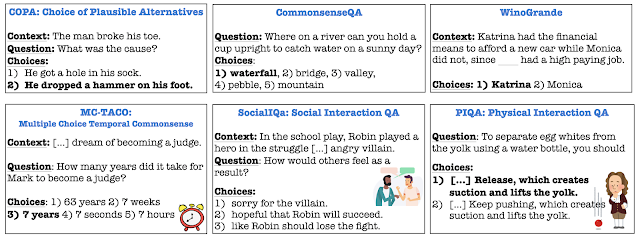

Multiple commonsense benchmarks have been released over the last few years. Some of them will be discussed here (see examples in Figure 6), along with the main differences and design choices when creating a benchmark.

Figure 6: Some commonsense benchmarks along with an example instance.

Type of knowledge: some benchmarks focus on a specific type of commonsense knowledge, such as social commonsense (e.g. Social IQa), physical commonsense (e.g. PIQA), temporal commonsense (e.g. MC-TACO), or causes and effects (e.g. COPA), while others target a broader domain of general commonsense knowledge and reasoning (e.g. WSC, WinoGrande, CommonsenseQA, ROCStories).

Size: most recent datasets include a large training set, in order to facilitate training large neural models. One way to create a benchmark is to hire experts to curate a high-quality dataset, as was done for WSC and COPA. Such datasets are expensive to collect and are therefore typically small. The common alternative is to collect data through crowdsourcing or semi-automatically, and to split it randomly into train, validation, and test sets. Models that learn dataset-specific shortcuts from the training set, rather than general phenomena, are likely to perform well on a test set drawn from the same distribution, but this performance is misleading and is likely much better than their performance on real-world instances of the task. Although this problem is well understood, random splitting is still the dominant approach.

Format: the vast majority of datasets are in the format of multiple choice questions, as exemplified in Figure 6. This format is the easiest to evaluate automatically: models are judged by their accuracy, i.e. the percentage of questions they answered correctly. Unfortunately, this type of task also makes it possible for a model to guess the correct answer. We’re not talking about a random guess, which is expected to result in an accuracy of 100/k %, where k is the number of answer choices (e.g. 50% for binary tests, 33.3% for tests with 3 choices, 25% for 4 choices, etc.) and so leaves plenty of room for improvement. The risk is that the model makes an “educated guess” based on (yes, you guessed it correctly) spurious correlations between the questions and the correct/incorrect answers.
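To make the accuracy and random-guess arithmetic concrete, here is a minimal sketch. The function names and the toy answer lists are made up for illustration and do not come from any particular benchmark.

```python
def accuracy(predictions, gold):
    """Percentage of questions answered correctly."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return 100.0 * correct / len(gold)


def chance_accuracy(num_choices):
    """Expected accuracy (in %) of a uniform random guess over k choices."""
    return 100.0 / num_choices


# Hypothetical 4-way multiple choice data: each number is the index of an answer choice.
gold = [0, 2, 1, 3, 2]
predictions = [0, 2, 3, 3, 1]

model_acc = accuracy(predictions, gold)
baseline = chance_accuracy(4)
print(f"accuracy: {model_acc:.1f}%, chance: {baseline:.1f}%")
```

Comparing a model against this baseline only rules out random guessing. It says nothing about whether a score above chance comes from actual reasoning or from spurious correlations.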

How do you make sure a model is right for the right reasons?

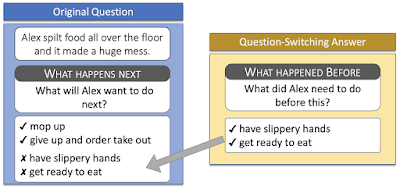

That’s the million-dollar question. We don’t have a perfect solution for this problem yet. For a start, when collecting a new benchmark, the process of collecting incorrect answers (distractors) should be designed so that the distractors are plausible but unlikely. Using random answers as distractors (e.g. naturally-occurring sentences or correct answers of different questions) would create topically-different distractors, which are easy to detect (remember, relatedness is one of the strengths of distributional text representations). Asking people to come up with the distractors may introduce other annotation artifacts, such as exaggerations, going off-topic, or producing overly emotional texts, which are easy for models to detect. Some solutions have been proposed: for example, the distractors in Social IQa are answers to different questions asked about the same context. In Figure 7, the context "Alex spilt food all over the floor and it made a huge mess." appears in the dataset with two questions: "what happens next?" and "what happened before?". The distractors of "what happens next?" are the correct answers of "what happened before?", e.g. that Alex has slippery hands. A similar approach is taken in CommonsenseQA.

Figure 7: Creating distractors for a Social IQa instance. Image credit: Maarten Sap.
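The idea of reusing correct answers to other questions about the same context can be sketched roughly as follows. This is an illustration under assumptions, not the actual Social IQa pipeline: the data layout and the `build_instances` helper are hypothetical, and the answer to "what happens next?" is invented for the example.

```python
# Hypothetical annotations: one context, several questions, each with correct answers.
annotations = {
    "Alex spilt food all over the floor and it made a huge mess.": {
        "what happens next?": ["mop up the mess"],          # invented answer
        "what happened before?": ["have slippery hands"],  # from the example above
    }
}


def build_instances(annotations):
    """Pair each question with distractors taken from the correct answers
    to the *other* questions asked about the same context."""
    instances = []
    for context, questions in annotations.items():
        for question, correct_answers in questions.items():
            distractors = [
                answer
                for other_question, answers in questions.items()
                if other_question != question
                for answer in answers
                if answer not in correct_answers
            ]
            for correct in correct_answers:
                instances.append(
                    {
                        "context": context,
                        "question": question,
                        "correct": correct,
                        "distractors": distractors,
                    }
                )
    return instances


instances = build_instances(annotations)
for inst in instances:
    print(inst["question"], "|", inst["correct"], "|", inst["distractors"])
```

Because the distractors were written about the same context, they stay on topic, so a model cannot separate them from the correct answer by relatedness alone.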

An alternative solution is to filter out easy questions through “adversarial filtering”, i.e. training a weaker model and iteratively removing instances that it succeeds in answering. Variants of adversarial filtering were applied to WinoGrande and PIQA.
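A toy sketch of the filtering loop is shown below. It is not the procedure used for WinoGrande or PIQA: the "weak model" here is a hypothetical word-counting scorer, the leave-one-out evaluation is a simplification that only suits tiny datasets, and the instances are invented so that the word "never" acts as an annotation artifact.

```python
from collections import Counter


def train_weak_model(instances):
    """Deliberately weak 'model': scores each word by how often it appeared
    in correct (+1) versus incorrect (-1) answer choices during training."""
    score = Counter()
    for inst in instances:
        for i, choice in enumerate(inst["choices"]):
            delta = 1 if i == inst["label"] else -1
            for word in choice.lower().split():
                score[word] += delta

    def predict(inst):
        totals = [sum(score[w] for w in c.lower().split()) for c in inst["choices"]]
        # Ties are resolved in favour of the first choice.
        return max(range(len(totals)), key=totals.__getitem__)

    return predict


def adversarial_filter(instances, rounds=3):
    """Iteratively drop the instances that the weak model answers correctly."""
    remaining = list(instances)
    for _ in range(rounds):
        kept = []
        for i, inst in enumerate(remaining):
            # Train on all other instances so the model is tested on unseen data.
            predict = train_weak_model(remaining[:i] + remaining[i + 1:])
            if predict(inst) != inst["label"]:
                kept.append(inst)
        if len(kept) == len(remaining):
            break  # nothing was removed in this round
        remaining = kept
    return remaining


# Hypothetical instances; "never" shows up only in incorrect answers.
data = [
    {"choices": ["never do that", "wipe the floor"], "label": 1},
    {"choices": ["never go home", "call a friend"], "label": 1},
    {"choices": ["eat lunch", "never sleep again"], "label": 0},
    {"choices": ["open a window", "shut every door"], "label": 1},
]

hard = adversarial_filter(data)
print([inst["choices"] for inst in hard])
```

In this toy run, the three instances that can be solved by avoiding "never" are filtered out, and only the instance without that shortcut survives.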

Finally, I believe the future is in generative tasks, in which the model needs to produce a free text answer without being provided with the candidate answers. Several recent benchmarks are generative, such as TimeTravel (counterfactual reasoning), ART (abductive reasoning), CommonGen, and ProtoQA. The challenge in generative tasks is the lack of reliable automatic evaluation metrics. Given the gold standard reference answer(s), we would like a metric to (1) reward correct generated answers that are different from the reference answer, while (2) penalizing incorrect answers that are similar (e.g. lexically) to the reference. Human evaluation is reliable, but it is costly and is typically done once on the test set. In order to be able to improve models during development, we need automatic metrics. We currently settle for metrics based on lexical overlap, such as BLEU and ROUGE, which are pretty terrible at (1) and have little correlation with human judgements, or for model-based metrics such as BERTScore, which are not great at (2).
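To see why lexical overlap struggles with (1) and (2), here is a small sketch using a unigram-overlap F1 score. This is a simplified stand-in, not BLEU or ROUGE themselves, and the reference and candidate answers are invented for illustration.

```python
from collections import Counter


def unigram_f1(candidate, reference):
    """F1 over shared lowercase whitespace tokens (a crude overlap metric)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference and generated answers.
reference = "alex will mop the floor"
correct_paraphrase = "he cleans up the mess"        # correct, different wording
incorrect_similar = "alex will not mop the floor"  # incorrect, similar wording

paraphrase_score = unigram_f1(correct_paraphrase, reference)
incorrect_score = unigram_f1(incorrect_similar, reference)
print(f"correct paraphrase: {paraphrase_score:.2f}")
print(f"incorrect but similar: {incorrect_score:.2f}")
```

The correct paraphrase shares only one word with the reference and scores low, while the incorrect answer shares almost every word and scores high, which is the opposite of what we want from a metric.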