History of Hadoop

Hadoop was started by Doug Cutting and Mike Cafarella in 2002. Its design grew out of the Google File System paper published by Google.

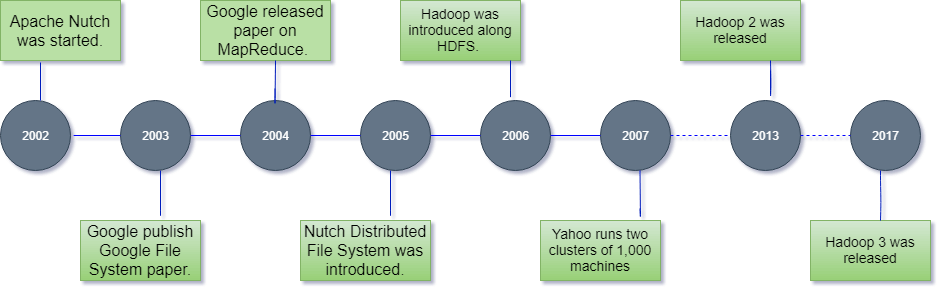

The history of Hadoop can be traced through the following steps:

- In 2002, Doug Cutting and Mike Cafarella started work on Apache Nutch, an open-source web crawler project.

- While working on Apache Nutch, they had to deal with very large volumes of data. Storing that data was expensive, and the cost became a serious obstacle for the project. This problem was one of the main reasons Hadoop emerged.

- In 2003, Google published a paper describing GFS (Google File System), a proprietary distributed file system designed to provide efficient access to data.

- In 2004, Google released a white paper on MapReduce, a technique that simplifies data processing on large clusters.

- In 2005, Doug Cutting and Mike Cafarella introduced a new file system known as NDFS (Nutch Distributed File System). Nutch also included an implementation of MapReduce that ran on top of it.

- In 2006, Doug Cutting joined Yahoo. Building on the Nutch project, he introduced a new project, Hadoop, with a file system known as HDFS (Hadoop Distributed File System). The first Hadoop version, 0.1.0, was released that year.

- Doug Cutting named the project Hadoop after his son’s toy elephant.

- In 2007, Yahoo was running two clusters of 1000 machines.

- In 2008, Hadoop became the fastest system to sort 1 terabyte of data, finishing in 209 seconds on a 900-node cluster.

- In 2013, Hadoop 2.2 was released.

- In 2017, Hadoop 3.0 was released.

| Year | Event |
|---|---|
| 2003 | Google released the Google File System (GFS) paper. |
| 2004 | Google released a white paper on MapReduce. |
| 2006 | Doug Cutting joined Yahoo. Hadoop 0.1.0 was released, with HDFS as its file system. |
| 2007 | Yahoo was running two clusters of 1000 machines. |
| 2008 | Hadoop sorted 1 terabyte of data in 209 seconds on a 900-node cluster. |
| 2009 | |
| 2010 | |
| 2011 | |
| 2012 | Apache Hadoop 1.0 released. |
| 2013 | Apache Hadoop 2.2 released. |
| 2014 | Apache Hadoop 2.6 released. |
| 2015 | Apache Hadoop 2.7 released. |
| 2017 | Apache Hadoop 3.0 released. |
| 2018 | Apache Hadoop 3.1 released. |