Back-propagation, also called “backpropagation” or simply “backprop,” is an algorithm for calculating the gradient of a loss function with respect to the variables of a model.

- Back-Propagation: Algorithm for calculating the gradient of a loss function with respect to variables of a model.

You may recall from calculus that the first-order derivative of a function for a specific value of an input variable is the rate of change, or slope, of the function at that input. When a function has multiple input variables, they form a vector, and the vector of first-order partial derivatives is called the gradient (a concept from vector calculus).

- Gradient: Vector of partial derivatives of a target function with respect to its input variables, evaluated at specific input values.
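To make the definition concrete, here is a minimal sketch that compares a hand-derived gradient with a numerical estimate. The function `f`, the helper names, and the evaluation point are all hypothetical choices made for illustration.

```python
def f(x, y):
    # Hypothetical target function chosen for illustration.
    return x ** 2 + 3.0 * y


def analytic_gradient(x, y):
    # Partial derivatives worked out by hand: df/dx = 2x, df/dy = 3.
    return [2.0 * x, 3.0]


def numerical_gradient(func, point, eps=1e-6):
    # Central finite differences, perturbing one input variable at a time.
    grad = []
    for i in range(len(point)):
        up = list(point)
        down = list(point)
        up[i] += eps
        down[i] -= eps
        grad.append((func(*up) - func(*down)) / (2.0 * eps))
    return grad


point = [2.0, 1.0]
print(analytic_gradient(*point))
print(numerical_gradient(f, point))
```

The two printed vectors should agree to several decimal places, since each entry of the gradient is the slope of the function along one input variable.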

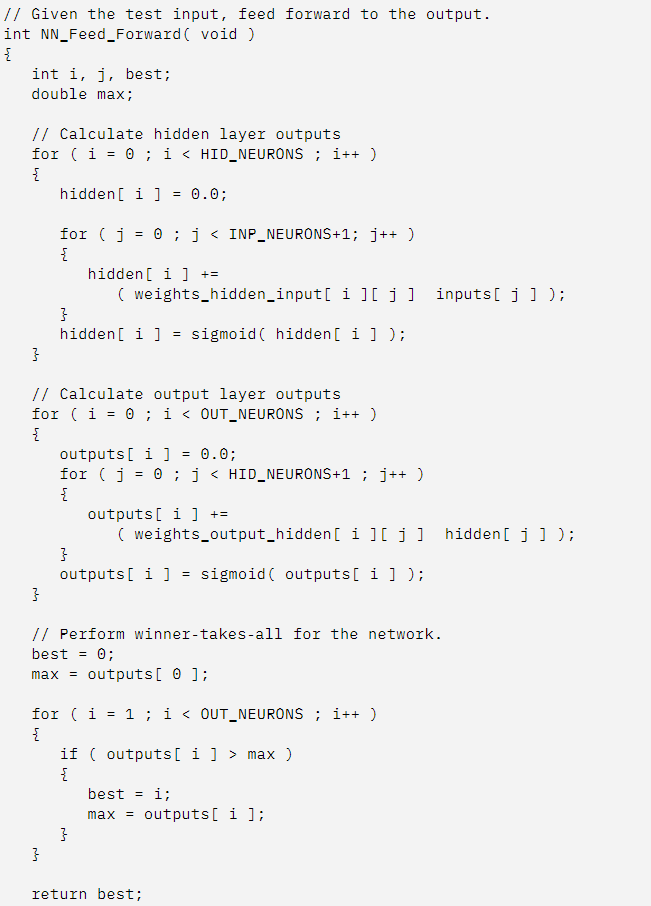

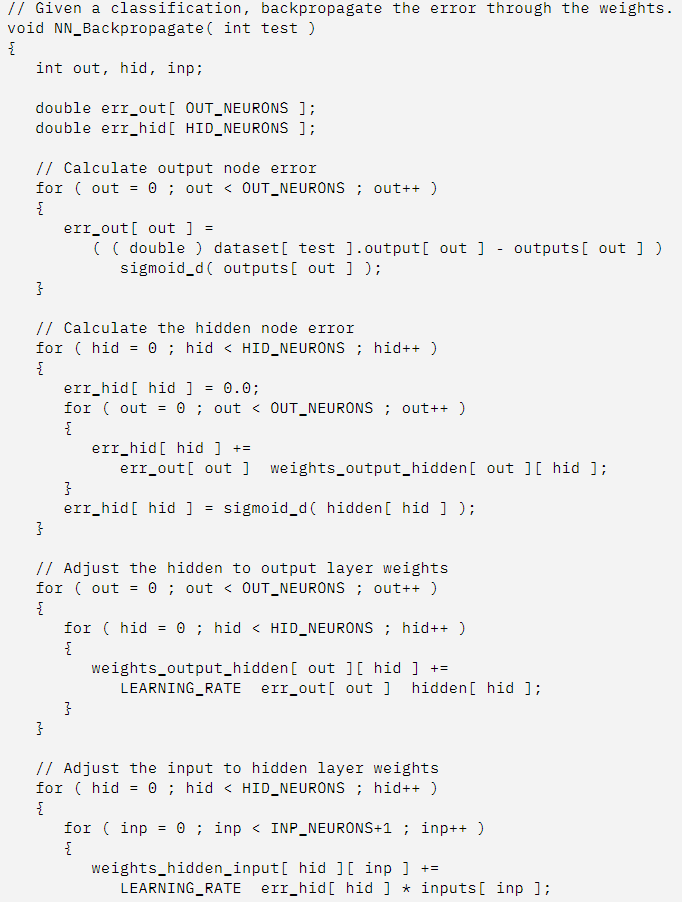

Back-propagation is used when training neural network models to calculate the gradient for each weight in the network model. The gradient is then used by an optimization algorithm to update the model weights.
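As a rough illustration of how an optimizer might consume a gradient, the sketch below applies plain gradient descent to a single weight. The model (`w * x`), the squared-error loss, the learning rate, and the data values are assumptions for this example, not details from the text above.

```python
def loss(w, x, y):
    # Squared error of a one-weight model: prediction is w * x.
    return (w * x - y) ** 2


def loss_gradient(w, x, y):
    # Derivative of the loss with respect to the weight w.
    return 2.0 * (w * x - y) * x


def gradient_descent(w, x, y, learning_rate=0.1, steps=50):
    # Repeatedly step the weight against the gradient.
    for _ in range(steps):
        w = w - learning_rate * loss_gradient(w, x, y)
    return w


w_final = gradient_descent(w=0.0, x=1.5, y=3.0)
print(w_final, loss(w_final, 1.5, 3.0))
```

In a real network, back-propagation would supply the gradient for every weight, and the same kind of update would be applied to each of them.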

The algorithm was developed explicitly for calculating the gradients of variables in graph structures. It works backward from the output of the graph toward the input, propagating the error in the predicted output, which is used to calculate the gradient for each variable.

The back-propagation algorithm, often simply called backprop, allows the information from the cost to then flow backwards through the network, in order to compute the gradient.

— Page 204, Deep Learning, 2016.

The loss function represents the error of the model, so it is also called the error function. The weights are the variables of this function, and the gradients of the error function with regard to the weights are therefore referred to as error gradients.

- Error Function: Loss function that is minimized when training a neural network.

- Weights: Parameters of the network taken as input values to the loss function.

- Error Gradients: First-order derivatives of the loss function with regard to the parameters.

This gives the algorithm its name “back-propagation,” or sometimes “error back-propagation” or the “back-propagation of error.”

- Back-Propagation of Error: Comment on how gradients are calculated recursively backward through the network graph starting at the output layer.
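The backward flow of error can be sketched on a very small network. This is a minimal example under stated assumptions: one input, one sigmoid hidden unit, one linear output, and a squared-error loss. The parameter names (`w1`, `b1`, `w2`, `b2`) and values are hypothetical, and the finite-difference check is included only to confirm the hand-written backward pass.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    z = w1 * x + b1          # hidden pre-activation
    h = sigmoid(z)           # hidden activation
    y_hat = w2 * h + b2      # network output
    return z, h, y_hat


def loss_fn(params, x, y):
    return (forward(params, x)[2] - y) ** 2


def backward(params, x, y):
    w1, b1, w2, b2 = params
    z, h, y_hat = forward(params, x)
    # Start at the output: derivative of the squared error w.r.t. the prediction.
    d_y_hat = 2.0 * (y_hat - y)
    # Gradients for the output-layer parameters.
    d_w2 = d_y_hat * h
    d_b2 = d_y_hat
    # Propagate the error back to the hidden activation, then through the sigmoid.
    d_h = d_y_hat * w2
    d_z = d_h * h * (1.0 - h)
    # Gradients for the hidden-layer parameters.
    d_w1 = d_z * x
    d_b1 = d_z
    return [d_w1, d_b1, d_w2, d_b2]


def finite_difference(params, x, y, eps=1e-6):
    # Numerical estimate of the same gradients, used only as a check.
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss_fn(up, x, y) - loss_fn(down, x, y)) / (2.0 * eps))
    return grads


params = [0.5, -0.3, 0.8, 0.1]
grads = backward(params, x=1.0, y=2.0)
check = finite_difference(params, x=1.0, y=2.0)
print(grads)
print(check)
```

Note how each quantity in `backward` is computed from one that was computed just before it, closer to the output. That reuse is what the word "recursively" refers to.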

The algorithm involves the recursive application of the chain rule from calculus (different from the chain rule from probability), which calculates the derivative of a composite function from the known derivatives of the functions that compose it.

The chain rule of calculus […] is used to compute the derivatives of functions formed by composing other functions whose derivatives are known. Back-propagation is an algorithm that computes the chain rule, with a specific order of operations that is highly efficient.

— Page 205, Deep Learning, 2016.

- Chain Rule: Calculus formula for calculating the derivatives of functions using related functions whose derivatives are known.
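As a small worked case of the chain rule, suppose $y = g(x)$ and $z = f(y)$. The symbols and the specific functions below are chosen purely for illustration.

$$
\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx}
$$

For example, with $y = 3x + 1$ and $z = y^2$:

$$
\frac{dz}{dy} = 2y, \qquad \frac{dy}{dx} = 3, \qquad \frac{dz}{dx} = 2y \cdot 3 = 6(3x + 1)
$$

Each factor is the derivative of one simple function, and the product gives the derivative of the composition.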

There are other algorithms for applying the chain rule, but back-propagation is an efficient algorithm for the specific graph structure of a neural network.

It is fair to call the back-propagation algorithm a type of automatic differentiation algorithm and it belongs to a class of differentiation techniques called reverse accumulation.

The back-propagation algorithm described here is only one approach to automatic differentiation. It is a special case of a broader class of techniques called reverse mode accumulation.

— Page 222, Deep Learning, 2016.

Although back-propagation was developed to train neural network models, both the algorithm itself and the chain rule that it implements efficiently can be used more generally to calculate derivatives of functions.
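To illustrate the general case, the following is a minimal sketch of reverse accumulation applied to an ordinary function with no neural network involved. The `Var` class, the `sin` and `backward` helpers, and the example function `a * b + sin(a)` are all hypothetical and written for this example; they are not the API of any library.

```python
import math


class Var:
    """Scalar node in a computation graph (hypothetical helper for illustration)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each entry is (parent_node, derivative of this node w.r.t. that parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def sin(node):
    return Var(math.sin(node.value), ((node, math.cos(node.value)),))


def backward(output):
    # Depth-first post-order puts every node after the nodes it depends on.
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Walk from the output back to the inputs, applying the chain rule.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


a, b = Var(2.0), Var(3.0)
f = a * b + sin(a)
backward(f)
print(a.grad, b.grad)
```

By hand, the derivative with respect to `a` is `b + cos(a)` and with respect to `b` is `a`, which is what the backward pass accumulates into `a.grad` and `b.grad`.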
