What Are Dropout and Batch Normalization?

Batch normalization and dropout both act as regularizers that help reduce overfitting in a deep learning model.

Batch Normalization: for every mini-batch, the output of each neuron is normalized to zero mean and unit variance, using the mean and variance computed over that batch. It makes the network significantly more robust to bad initialization.
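To make the normalization step concrete, here is a minimal plain-Python sketch. The function name `batch_norm` and the parameters `gamma`, `beta`, and `eps` are illustrative assumptions, not taken from any particular library; in a real layer the scale and shift would be learned, while here they are fixed.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature (column) of `batch` over the batch dimension.

    `batch` is a list of rows, one row per example. `gamma` (scale), `beta`
    (shift), and `eps` (numerical-stability term) are hypothetical names.
    """
    n = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(n)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        # Population variance of this feature over the current batch only.
        var = sum((x - mean) ** 2 for x in column) / n
        std = math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = gamma * (column[i] - mean) / std + beta
    return out

# Two features on very different scales end up on the same scale.
activations = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
normalized = batch_norm(activations)
for row in normalized:
    print([round(v, 3) for v in row])
```

After the call, each column has a mean of about zero and a variance of about one, regardless of its original scale.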

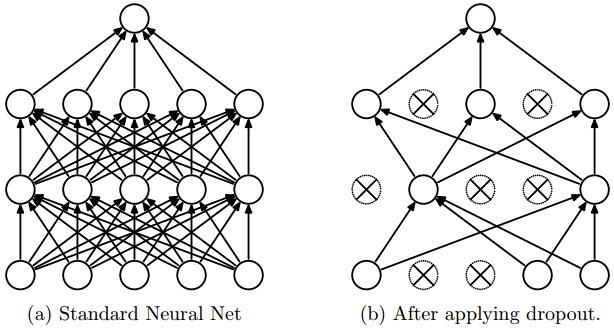

Dropout: during training, randomly select neurons and set their output value to zero. You can think of it as a regularization technique that prevents neural networks from overfitting by randomly removing some neurons at each training step.
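The random zeroing can be sketched in a few lines of plain Python. Note that this sketch uses the "inverted dropout" variant, which also rescales the surviving outputs by `1 / (1 - p)` so the expected activation stays the same; that rescaling is an addition beyond the description above. The names `dropout`, `p`, `training`, and `rng` are illustrative assumptions.

```python
import random

def dropout(activations, p=0.5, training=True, rng=None):
    """Inverted dropout: zero each value with probability `p`, rescale the rest.

    At inference time (`training=False`) the input passes through unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep = 1.0 - p
    # Each neuron is kept with probability `keep` and scaled by 1 / keep.
    return [x / keep if rng.random() < keep else 0.0 for x in activations]

rng = random.Random(0)  # seeded only to make the sketch repeatable
hidden = [1.0] * 10
print(dropout(hidden, p=0.5, rng=rng))        # a mix of 0.0 and 2.0
print(dropout(hidden, p=0.5, training=False)) # unchanged at inference
```

A different random subset of neurons is dropped on every call, so the network cannot rely on any single neuron being present.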

Just wanted to add a further point on batch normalization. As the name suggests, during training the statistics come from the current “batch”, never from a rolling mean. This could blow up the learning process altogether if a batch contains extreme values right at the initial stages of training.

Another caution when using batch normalization is to avoid extremely small batch sizes, because the mean and variance of a tiny batch are noisy estimates of the dataset statistics. If the batch distribution and the training dataset distribution are highly mismatched, you’ll have to use batch renormalization.
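A quick way to see why small batches are a problem is to measure how much the per-batch mean wobbles around the dataset mean. The sketch below is only an illustration on synthetic Gaussian data; the helper `batch_mean_spread` and the chosen batch sizes are assumptions made for this example.

```python
import random
import statistics

def batch_mean_spread(data, batch_size, n_batches, rng):
    """Standard deviation of the per-batch means over `n_batches` random batches."""
    means = [
        statistics.fmean(rng.sample(data, batch_size))
        for _ in range(n_batches)
    ]
    return statistics.stdev(means)

rng = random.Random(0)  # seeded only to make the sketch repeatable
# Synthetic "dataset": 10,000 values drawn from a standard normal distribution.
data = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

spread_small = batch_mean_spread(data, batch_size=2, n_batches=500, rng=rng)
spread_large = batch_mean_spread(data, batch_size=64, n_batches=500, rng=rng)
print(f"spread of batch means, batch size 2:  {spread_small:.3f}")
print(f"spread of batch means, batch size 64: {spread_large:.3f}")
```

The spread shrinks roughly with the square root of the batch size, so with very small batches the statistics used for normalization can sit far from those of the full training set.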
