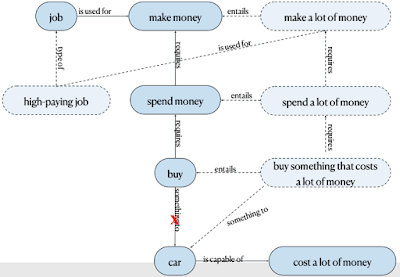

The knowledge in commonsense resources may enhance models built for solving commonsense benchmarks. For example, we can extract from ConceptNet the assertions that a job is used for making money, that spending money requires making money, that buying requires spending money, and that a car is something you can buy. Ideally, we would also need the knowledge that a high-paying job is a type of job, specifically one used for making a lot of money, which is required for spending a lot of money, which in turn is required for buying something that costs a lot of money, a car being one such thing. Finally, we may want to remove the edge from “buy” to “car” so that we can only reach “car” from the node “buy something that costs a lot of money”.

Figure 12: Knowledge extracted from ConceptNet for the WinoGrande instance discussed above.
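To make the idea of extracting a chain of assertions concrete, here is a minimal sketch that finds a path between two concepts in a tiny hand-written graph. The edge list paraphrases the assertions mentioned above. The relation labels (`UsedFor`, `HasPrerequisite`, `ReceivesAction`) are my assumption about how ConceptNet would label them, and nothing here is fetched from ConceptNet itself.

```python
from collections import deque

# Toy assertions paraphrasing the example in the text. The relation labels are
# assumed, and this edge list is hand-written rather than pulled from ConceptNet.
EDGES = [
    ("job", "UsedFor", "make money"),
    ("spend money", "HasPrerequisite", "make money"),
    ("buy", "HasPrerequisite", "spend money"),
    ("car", "ReceivesAction", "buy"),
]


def build_graph(edges):
    """Build an undirected adjacency map: node -> list of (neighbor, relation)."""
    graph = {}
    for head, relation, tail in edges:
        graph.setdefault(head, []).append((tail, relation))
        graph.setdefault(tail, []).append((head, relation))
    return graph


def find_path(graph, start, goal):
    """Breadth-first search returning the shortest node path, or None if unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbor, _relation in graph.get(path[-1], []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


graph = build_graph(EDGES)
path = find_path(graph, "job", "car")
print(" -> ".join(path))
```

Note that this toy graph connects "job" to "car" without saying anything about how much money is involved, which is exactly the missing knowledge discussed above.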

How do we incorporate knowledge from knowledge resources into a neural model?

The simple recipe (success not guaranteed) calls for 4 ingredients: the task addressed, the knowledge resource used, the neural component, and the combination method. We have already discussed tasks and knowledge resources, so I would only add here that ConceptNet is the main resource used in downstream models, although some models incorporate other knowledge sources, such as other knowledge bases (WordNet, ATOMIC), knowledge mined from text, and tools (knowledge base embeddings, sentiment analysis models, COMET).

The neural component is the shiny new neural architecture: language models in the last 3 years, biLSTMs in the years prior, etc. The more interesting component is the combination method. We will look at 3 examples:

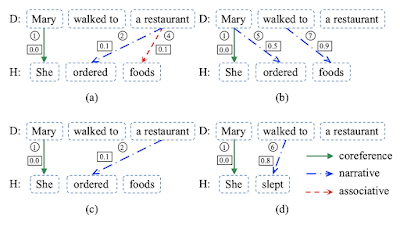

Incorporating into the scoring function: Lin et al. (2017) extracted probabilistic “rules” connecting pairs of terms from multiple sources such as WordNet (restaurant→eatery: 1.0), Wikipedia categories (restaurant→business: 1.0), script knowledge mined from text (X went to a restaurant→X ate: 0.32), word embedding-based relatedness scores (restaurant→food: 0.71), and more. The model scores each candidate answer according to the scores of the inference rules used to get from the context (e.g. "Mary walked to a restaurant" in Figure 14) to the candidate answer (e.g. "She ordered foods.").

Figure 14: “covering” each candidate answer by the original context and the rules extracted from various sources. Image credit: Lin et al. (2017).
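As a rough illustration of scoring a candidate by the rules that connect it to the context, here is a deliberately simplified sketch. It is not the model of Lin et al. (2017): the rule scores are the ones quoted above, but the term-level matching, the averaging scheme, the function name `score_candidate`, and the distractor answer are all my own assumptions.

```python
# Rule scores quoted in the text. Reducing the script rule
# "X went to a restaurant -> X ate" to a term pair is a simplification.
RULES = {
    ("restaurant", "eatery"): 1.0,
    ("restaurant", "business"): 1.0,
    ("restaurant", "ate"): 0.32,
    ("restaurant", "food"): 0.71,
}


def score_candidate(context_terms, candidate_terms, rules):
    """Average, over candidate terms, of the best rule score from any context term.

    A candidate term that no rule reaches contributes 0.0 (assumed scheme).
    """
    if not candidate_terms:
        return 0.0
    total = 0.0
    for cand in candidate_terms:
        total += max(
            (rules.get((ctx, cand), 0.0) for ctx in context_terms),
            default=0.0,
        )
    return total / len(candidate_terms)


context = ["mary", "walked", "restaurant"]
candidates = {
    "ordered food": ["ordered", "food"],
    "went swimming": ["went", "swimming"],  # hypothetical distractor
}
scores = {
    name: score_candidate(context, terms, RULES)
    for name, terms in candidates.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

The candidate that is better "covered" by rules from the context gets the higher score; the actual model learns how to weigh and combine rules rather than averaging them.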

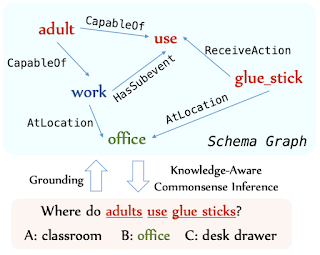

Representing symbolic knowledge as vectors: Lin et al. (2019) used BERT as the neural component to represent the instance (statement vector). For their symbolic component, they extracted subgraphs from ConceptNet pertaining to concepts mentioned in the instance and learned to represent them as a vector (graph vector). These two vectors were provided as input to the answer scorer which was trained to predict the correct answer choice.

Figure 15: extracting subgraphs from ConceptNet pertaining to concepts mentioned in the instance. Image credit: Lin et al. (2019).
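The combination step can be sketched as concatenating the two vectors and feeding them to a scorer. The sketch below assumes a plain linear scorer followed by a softmax over answer choices, which is a simplification of mine and not necessarily the architecture of Lin et al. (2019). All vectors and weights are made-up placeholders; in the real model the statement vector would come from BERT and the graph vector from a learned graph encoder.

```python
import math


def score_choice(statement_vec, graph_vec, weights, bias=0.0):
    """Linear score over the concatenation [statement_vec; graph_vec]."""
    features = statement_vec + graph_vec  # list concatenation
    if len(features) != len(weights):
        raise ValueError("weights must match the concatenated vector length")
    return sum(f * w for f, w in zip(features, weights)) + bias


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Placeholder vectors, one pair per answer choice (hypothetical values).
choices = [
    {"statement": [0.2, 0.1], "graph": [0.9, 0.3]},
    {"statement": [0.2, 0.4], "graph": [0.1, 0.0]},
]
weights = [0.5, -0.2, 1.0, 0.7]  # would be learned during training

logits = [score_choice(c["statement"], c["graph"], weights) for c in choices]
probs = softmax(logits)
predicted = probs.index(max(probs))
print(predicted, probs)
```

Because the graph vector is just another part of the scorer's input, the symbolic knowledge influences the prediction without changing how the text itself is encoded.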

Multi-task learning: Xia et al. (2019) fine-tuned a BERT model to solve multiple-choice questions. They also trained it on two auxiliary tasks supervised by ConceptNet: given two concepts as input, a classifier had to predict whether they are related, and which specific ConceptNet property connects them. The BERT model was shared between the main and the auxiliary tasks, so that commonsense knowledge from ConceptNet was instilled into BERT, improving its performance on the main task.

Figure 16: multi-task learning aimed at instilling knowledge from ConceptNet into BERT.
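One common way to train a shared model on a main task and auxiliary tasks is to minimize a weighted sum of their losses. The sketch below shows only that objective, under my own assumptions: the weighting scheme, the `aux_weight` parameter, and the example probabilities are hypothetical, and the source does not say how Xia et al. (2019) combined the losses. The parameter sharing itself (one BERT encoder feeding all three classifiers) is not modeled here.

```python
import math


def cross_entropy(probs, gold_index):
    """Negative log-likelihood of the gold class under predicted probabilities."""
    return -math.log(probs[gold_index])


def multitask_loss(main, related, relation_type, aux_weight=0.5):
    """Main-task loss plus weighted auxiliary losses.

    Each argument is a (probs, gold_index) pair. aux_weight is a hypothetical
    hyperparameter controlling how much the auxiliary tasks count.
    """
    main_loss = cross_entropy(*main)
    aux_loss = cross_entropy(*related) + cross_entropy(*relation_type)
    return main_loss + aux_weight * aux_loss


# Hypothetical predictions for one training step.
main = ([0.7, 0.1, 0.1, 0.1], 0)      # multiple-choice answer
related = ([0.8, 0.2], 0)             # are the two concepts related?
relation_type = ([0.6, 0.3, 0.1], 0)  # which property connects them?

loss = multitask_loss(main, related, relation_type)
print(round(loss, 4))
```

Since gradients from all three terms flow into the same shared encoder, the auxiliary tasks nudge its representations toward encoding ConceptNet relations.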
